The AWS Blu Age modernization process follows several steps. First, the customer provides legacy artifacts, which are analyzed during the Assessment phase. This phase helps us understand the size, content, and potential challenges of the codebase, and it prepares the next step: Calibration. The purpose of the Calibration phase is to involve the customer in an initial, small project. This clarifies their role in the modernization process and showcases the capabilities of the AWS Blu Age solution before the entire codebase is modernized. In the Calibration phase, we use the results of the Assessment to select a representative sub-scope of the full project. This lets us discover the technical implementations, design choices, and specificities of the codebase early, rather than throughout the AWS Blu Age modernization process. After Calibration, the Mass Modernization phase can begin, during which we modernize and migrate the entire project scope. A well-chosen calibration scope makes the mass modernization phase smoother, reducing overall cost and time.

What is a good calibration?

The calibration scope must be chosen to exercise as many different technical functionalities of the legacy application as possible, focusing on the challenges detected earlier, while using as few lines of code as possible. To make this selection effective, several criteria must be considered. An optimal scope should:

- contain testable, independently runnable features;

- include some of the challenges (errors, libraries, non-sweet-spot code, etc.) and represent the technical functionalities;

- leverage the dependency analysis to include the most central legacy artifacts.

We introduce AWS Blu Insights Calibration Scoping, an assistant that helps define this optimal scope. Its main purpose is to compute a score for each testable feature of a codebase and, given a target number of lines of code, suggest a set of high-scoring features to include in the calibration scope. Several metrics are computed to achieve this.

How do we compute a calibration scope?

Criterion 1: Testable and independently runnable features

The calibration scope definition relies on the outputs of the Application Entrypoints and Application Features processes. The decomposition produced by the Application Features process provides a set of testable features on which the metrics are computed. The calibration scope is then an aggregation of some of these features.

Criterion 2: Technical functionalities and pain points

Broadly speaking, a legacy program incorporates technical functionalities from various categories, such as file management, database access, and mathematical operations. To capture these different families, we introduce the concepts of Category and Code Pattern: statements in a file are classified into Code Patterns, which are linked to specific families (Categories). Using Code Patterns, we compute two metrics:

- Coverage: the percentage of code pattern types present in the file, relative to all the code pattern types found in the application features.

- Rarity: the mean rarity of the code patterns in the file, where the rarity of a code pattern is the ratio of its number of occurrences in the file to its total number of occurrences across all files.
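To make the two definitions concrete, here is a minimal Python sketch that assumes each file has already been reduced to a count of code pattern occurrences. The pattern names (`FILE_READ`, `SQL_SELECT`, ...) and file names are hypothetical, and the sketch only restates the definitions above. It is not the AWS Blu Insights implementation.

```python
from collections import Counter


def coverage(file_patterns: Counter, all_types: set) -> float:
    """Percentage of all code pattern types that appear at least once in this file."""
    if not all_types:
        return 0.0
    present = {p for p, n in file_patterns.items() if n > 0}
    return 100.0 * len(present & all_types) / len(all_types)


def rarity(file_patterns: Counter, totals: Counter) -> float:
    """Mean, over the pattern types in this file, of
    (occurrences in this file / occurrences in all files)."""
    ratios = [n / totals[p] for p, n in file_patterns.items() if n > 0 and totals[p] > 0]
    return sum(ratios) / len(ratios) if ratios else 0.0


# Hypothetical pattern counts per legacy file
files = {
    "PGM001.cbl": Counter({"FILE_READ": 4, "SQL_SELECT": 2}),
    "PGM002.cbl": Counter({"FILE_READ": 1, "COMPUTE": 3}),
    "PGM003.cbl": Counter({"CICS_SEND": 1}),
}
totals = sum(files.values(), Counter())  # total occurrences of each pattern across all files
all_types = set(totals)

for name, patterns in files.items():
    print(name, round(coverage(patterns, all_types), 1), round(rarity(patterns, totals), 2))
# Expected output:
# PGM001.cbl 50.0 0.9
# PGM002.cbl 50.0 0.6
# PGM003.cbl 25.0 1.0
```

In this example, `PGM003.cbl` has low coverage but the highest rarity, because it holds the only `CICS_SEND` occurrence in the codebase. This is exactly the kind of artifact a calibration scope should not miss.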

When the AWS Blu Age Transformation Engine cannot modernize a legacy artifact, these metrics cannot be computed for it. This information is still taken into account in the analysis, through the Error Category.

Criterion 3: Central legacy artifacts

Building on the dependency analysis of AWS Blu Insights Codebase, we introduce the notion of Centrality: a value computed from the inbound and outbound links between a given file and other files, and influenced by the centrality values of its neighbors. The higher a file's centrality, the more central it is in the application, and the higher the score of the Application Features to which it belongs.
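The exact Centrality formula is not given here. As an illustration only, the sketch below assumes a PageRank-style power iteration on the dependency graph. Inbound and outbound links are treated alike, so a file's score grows with the scores of the files it is linked to. The damping factor, iteration limit, and program names are hypothetical.

```python
def centrality(edges, damping=0.85, iterations=100, tol=1e-10):
    """PageRank-style centrality on an undirected dependency graph.

    Assumption: this illustrates "a value computed from inbound and outbound
    links, influenced by the neighbors' values"; it is not the Blu Insights formula.
    """
    # Treat inbound and outbound links alike: build an undirected adjacency map
    neighbors = {}
    for src, dst in edges:
        neighbors.setdefault(src, set()).add(dst)
        neighbors.setdefault(dst, set()).add(src)
    nodes = sorted(neighbors)
    n = len(nodes)
    if n == 0:
        return {}
    score = {v: 1.0 / n for v in nodes}  # start from a uniform distribution
    for _ in range(iterations):
        new = {}
        for v in nodes:
            # Each neighbor shares its current score evenly among its own neighbors
            incoming = sum(score[u] / len(neighbors[u]) for u in neighbors[v])
            new[v] = (1 - damping) / n + damping * incoming
        delta = sum(abs(new[v] - score[v]) for v in nodes)
        score = new
        if delta < tol:  # stop once the scores have stabilized
            break
    return score


# Hypothetical call graph: MAIN calls three programs, two of which share a utility
edges = [("MAIN", "ORDERS"), ("MAIN", "BILLING"), ("MAIN", "REPORT"),
         ("ORDERS", "DATEUTIL"), ("BILLING", "DATEUTIL")]
for name, value in sorted(centrality(edges).items(), key=lambda kv: -kv[1]):
    print(f"{name:10s} {value:.3f}")
```

Here `MAIN` ranks first and the leaf program `REPORT` ranks last. `DATEUTIL` scores slightly higher than `ORDERS` and `BILLING`, even though it has the same number of links as they do. It inherits part of its value from being linked to two files that are themselves well connected.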

How to run the calibration assistant

Although we have tried to simplify the technical concepts behind calibration in the previous sections, they remain complex. The good news is that, as an AWS Blu Insights user, you don’t need to understand or handle these details: the Calibration assistant automates the process.

Launch a Calibration

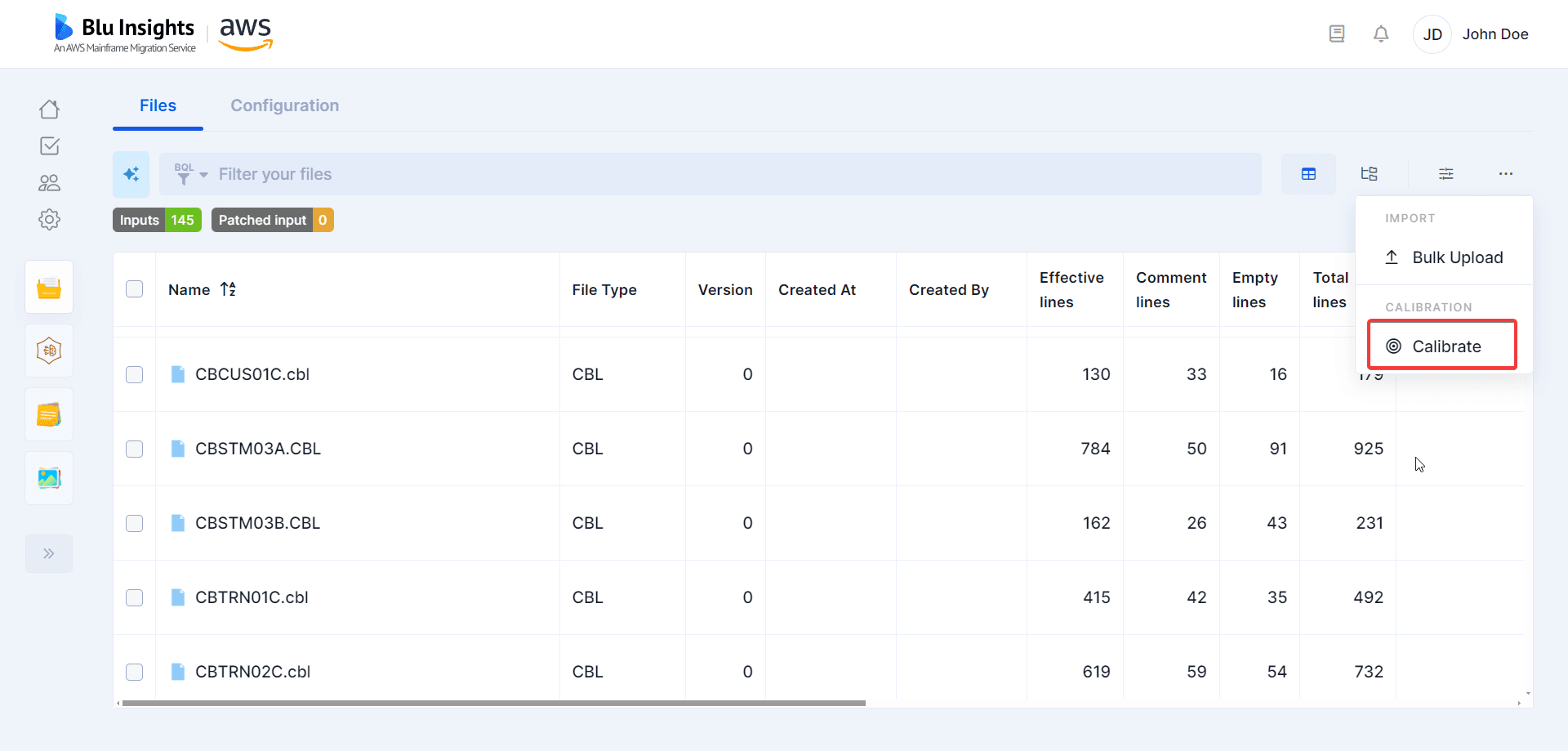

The entry point of this new feature, “Calibrate”, is located in a Transformation Center project, in the Inputs view (under the ... menu).

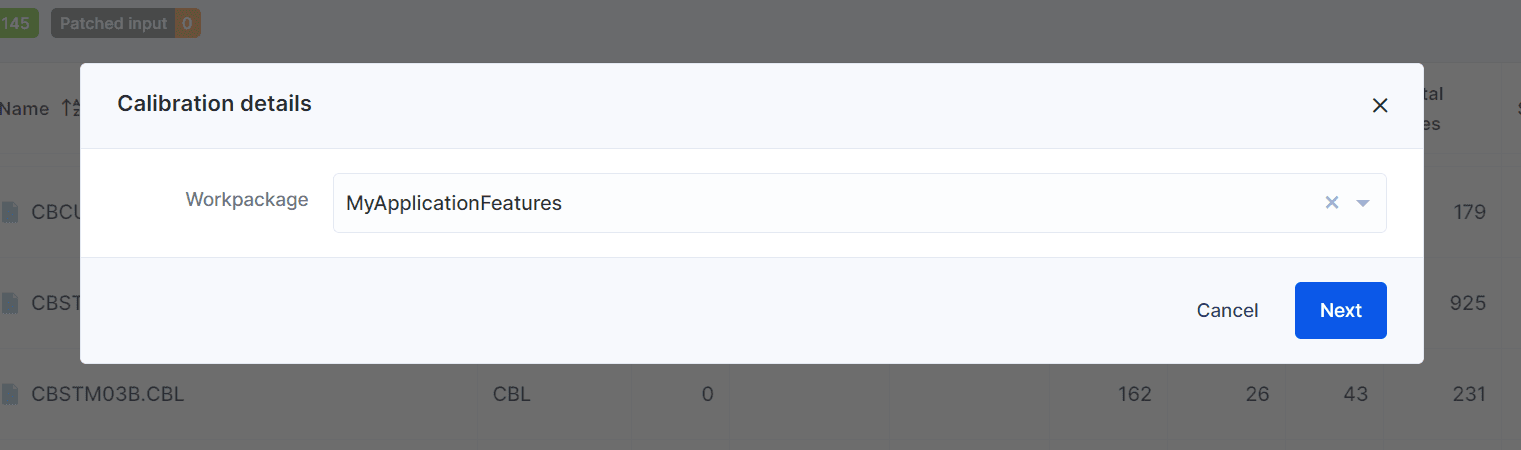

When launching a Calibration process, the customer must provide a parent workpackage. This workpackage can come from the Application Features process, but it can also be tailor-made from other inputs. Its child workpackages are used to consolidate the calibration metrics of the artifacts they contain.
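How file-level metrics are consolidated per child workpackage is not detailed here. The sketch below shows one plausible rule set, under clearly hypothetical assumptions. Lines of code are summed, code pattern counts are merged, the highest file centrality is kept, and files the Transformation Engine could not modernize (the Error Category) are counted. The class and field names are illustrative only.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class FileMetrics:  # hypothetical per-file record
    loc: int
    patterns: Counter
    centrality: float
    in_error: bool = False  # True when the Transformation Engine could not modernize the file


@dataclass
class WorkpackageMetrics:  # hypothetical per-workpackage record
    loc: int = 0
    patterns: Counter = field(default_factory=Counter)
    max_centrality: float = 0.0
    error_files: int = 0


def consolidate(children, files):
    """children: {child workpackage: [file names]}; files: {file name: FileMetrics}."""
    result = {}
    for wp, members in children.items():
        agg = WorkpackageMetrics()
        for name in members:
            m = files[name]
            agg.loc += m.loc                   # assumption: LOC is additive
            agg.patterns += m.patterns         # merge pattern occurrence counts
            agg.max_centrality = max(agg.max_centrality, m.centrality)
            agg.error_files += int(m.in_error)
        result[wp] = agg
    return result


files = {
    "PGM001": FileMetrics(1200, Counter({"FILE_READ": 4}), 0.30),
    "PGM002": FileMetrics(800, Counter({"SQL_SELECT": 2}), 0.20, in_error=True),
    "PGM003": FileMetrics(300, Counter({"CICS_SEND": 1}), 0.10),
}
children = {"WP_ORDERS": ["PGM001", "PGM002"], "WP_BILLING": ["PGM002", "PGM003"]}
for wp, m in consolidate(children, files).items():
    print(wp, m.loc, dict(m.patterns), m.max_centrality, m.error_files)
```

Application Features often share programs, as `PGM002` does here. Per-workpackage totals therefore overlap, and any sum over a selection of workpackages should be computed on the union of their files rather than on these totals.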

Calibration run

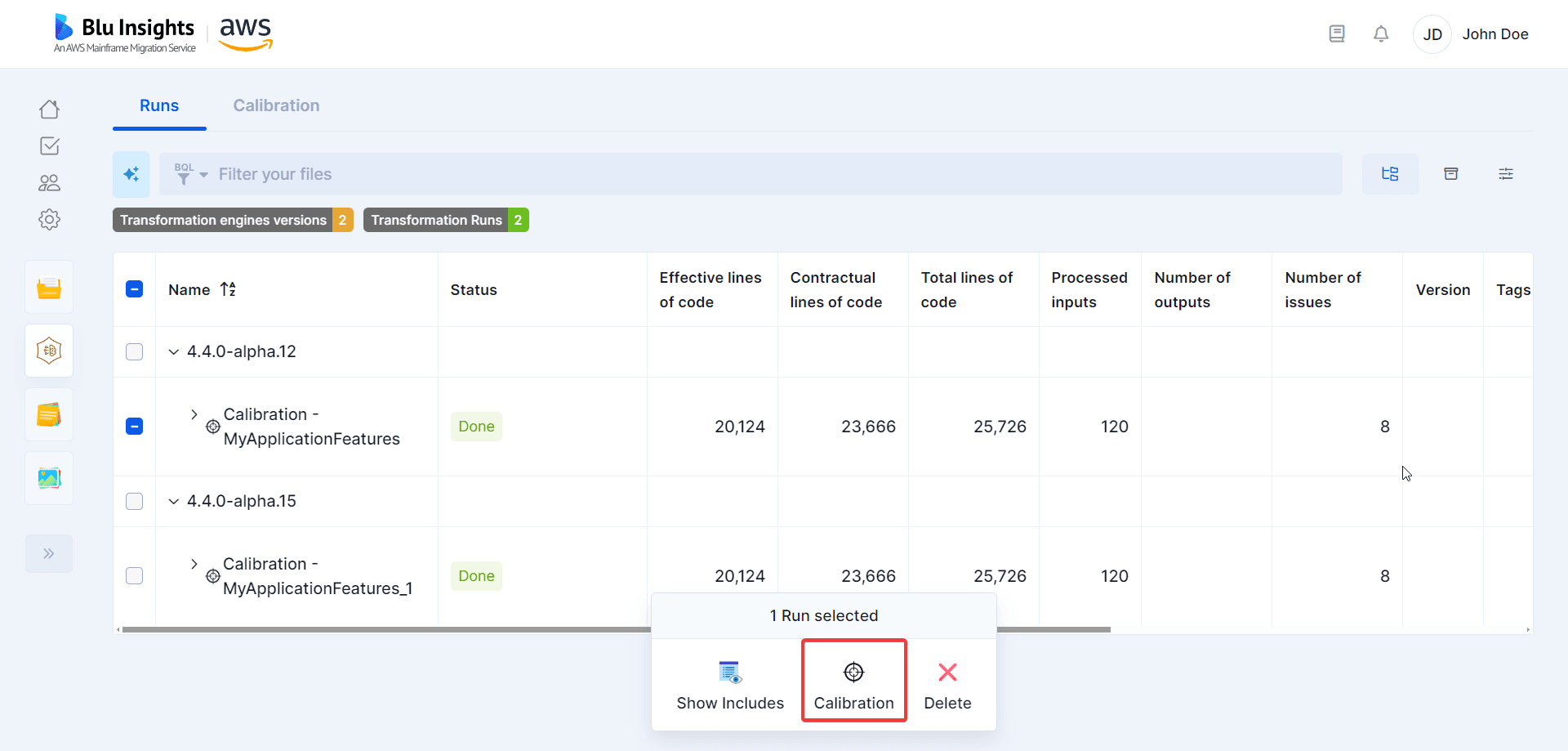

Once the Calibration Run has been created, it is accessible from the Transformation Runs view. Selecting it displays a Calibration button in the bottom banner, which opens the new Calibration view.

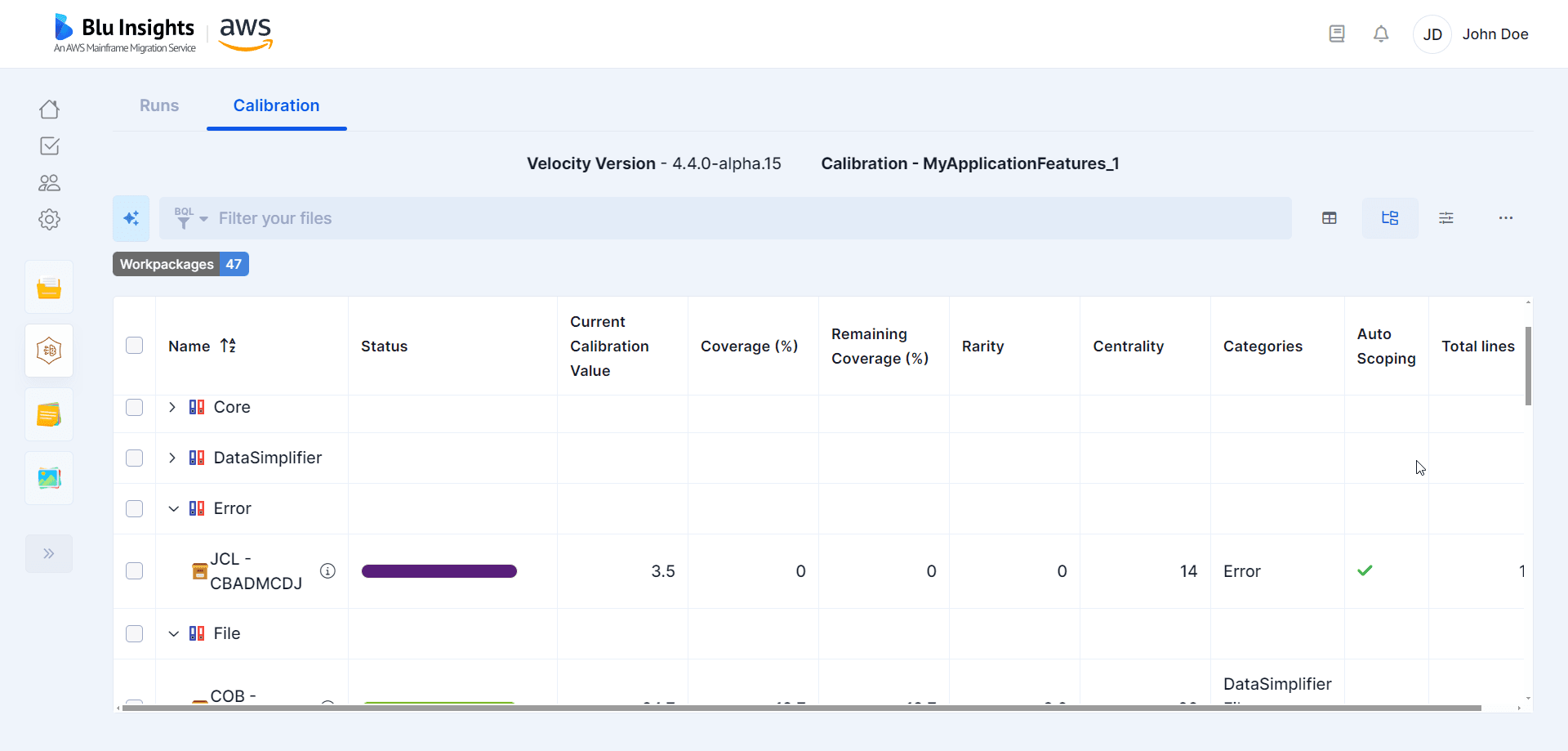

Calibration view

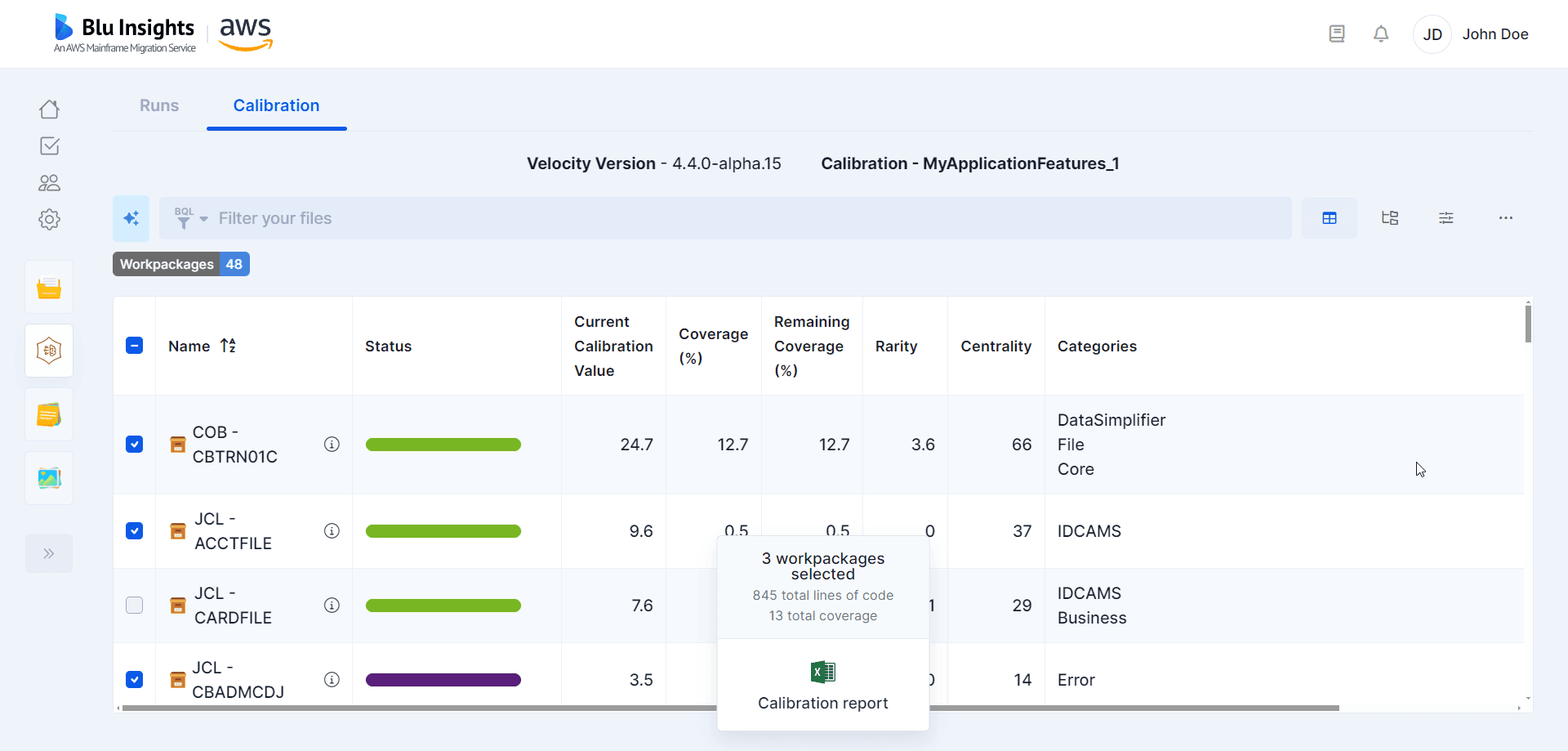

The main element of this new page is a table in which each row represents a workpackage along with all the previously computed metrics. The Transformation status of each workpackage is shown as a status bar representing the percentage of its files modernized with a success or warning status. When you select one or more workpackages, the coverage percentage and the total number of included lines of code are displayed in the bottom banner. The remaining coverage and current calibration value of each workpackage are also updated in the corresponding table columns. This view gathers everything needed to choose a calibration scope: select high-scoring workpackages while keeping an eye on the constraints and metrics (lines of code, remaining calibration value, etc.).
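The bookkeeping behind the bottom banner and the "remaining coverage" column can be sketched as follows. This assumes, hypothetically, that remaining coverage means the percentage of code pattern types a workpackage would add that the current selection does not yet cover. It also assumes that lines of code are counted on the union of selected files, so shared programs are not counted twice. Names and data are illustrative.

```python
def selection_summary(selected, workpackages, file_types, file_loc, all_types):
    """Coverage and LOC of the current selection, plus the remaining coverage of each
    unselected workpackage (percentage of pattern types it would newly add)."""
    # Union of files: a file shared by several selected workpackages is counted once
    files = set().union(*(workpackages[wp] for wp in selected))
    covered = set().union(*(file_types[f] for f in files))
    total_loc = sum(file_loc[f] for f in files)
    n_types = len(all_types) or 1  # avoid division by zero on an empty project
    coverage = 100.0 * len(covered & all_types) / n_types
    remaining = {}
    for wp, wp_files in workpackages.items():
        if wp in selected:
            continue
        types = set().union(*(file_types[f] for f in wp_files))
        remaining[wp] = 100.0 * len((types - covered) & all_types) / n_types
    return coverage, total_loc, remaining


# Hypothetical data: code pattern types and LOC per file, files per workpackage
file_types = {"PGM001": {"FILE_READ", "SQL_SELECT"},
              "PGM002": {"FILE_READ", "COMPUTE"},
              "PGM003": {"CICS_SEND"}}
file_loc = {"PGM001": 1200, "PGM002": 800, "PGM003": 300}
workpackages = {"WP1": {"PGM001", "PGM002"}, "WP2": {"PGM002"}, "WP3": {"PGM003"}}
all_types = set().union(*file_types.values())

print(selection_summary({"WP2"}, workpackages, file_types, file_loc, all_types))
# (50.0, 800, {'WP1': 25.0, 'WP3': 25.0})
```

After selecting `WP2`, the remaining coverage of `WP1` drops to 25%. The only new type it still brings is `SQL_SELECT`. This is why the column is recomputed on every selection change instead of being fixed per workpackage.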

Categories view

A second view shows all the categories detected during the transformation. As in the Calibration view, the user can select workpackages here, and the selection is also reflected in the Calibration view.

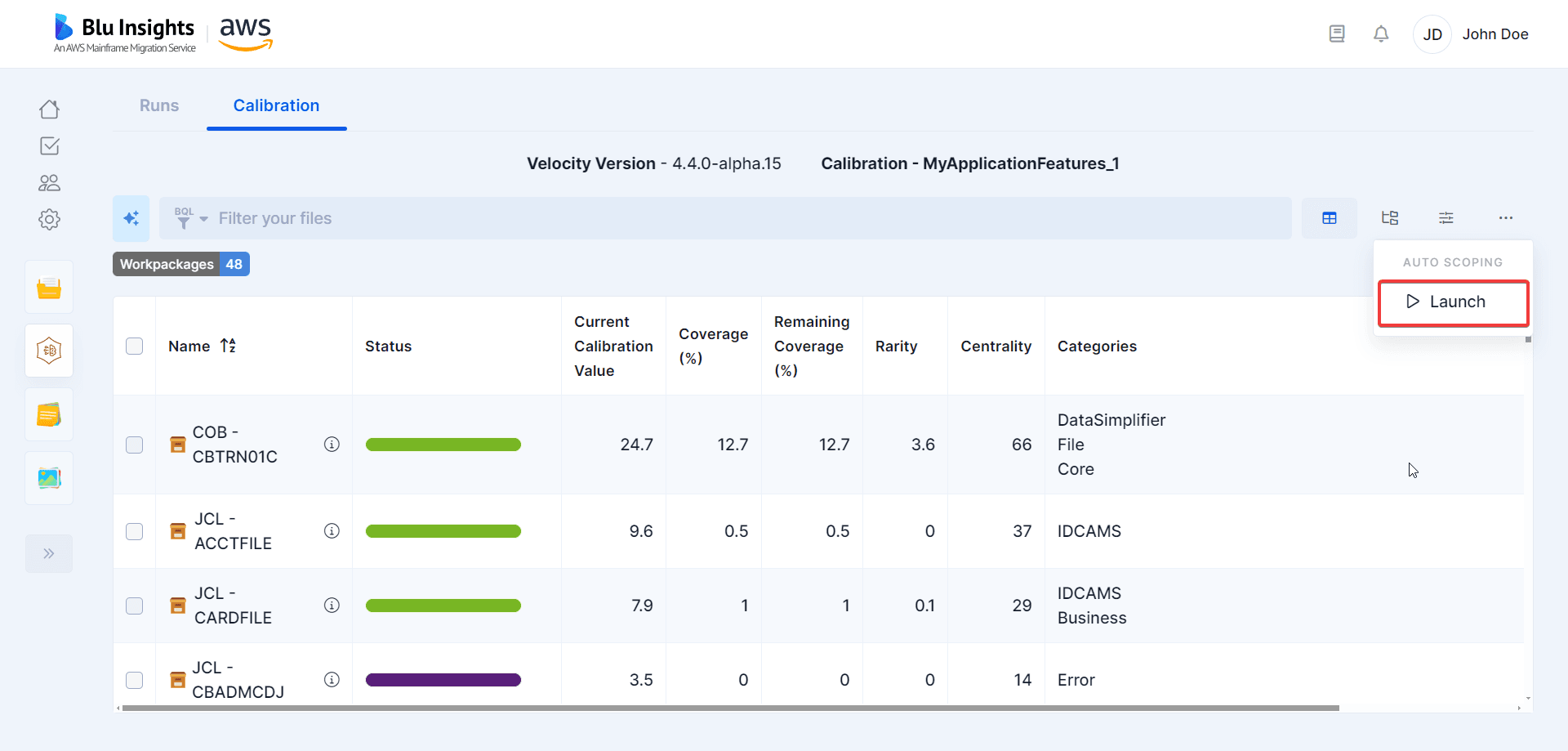

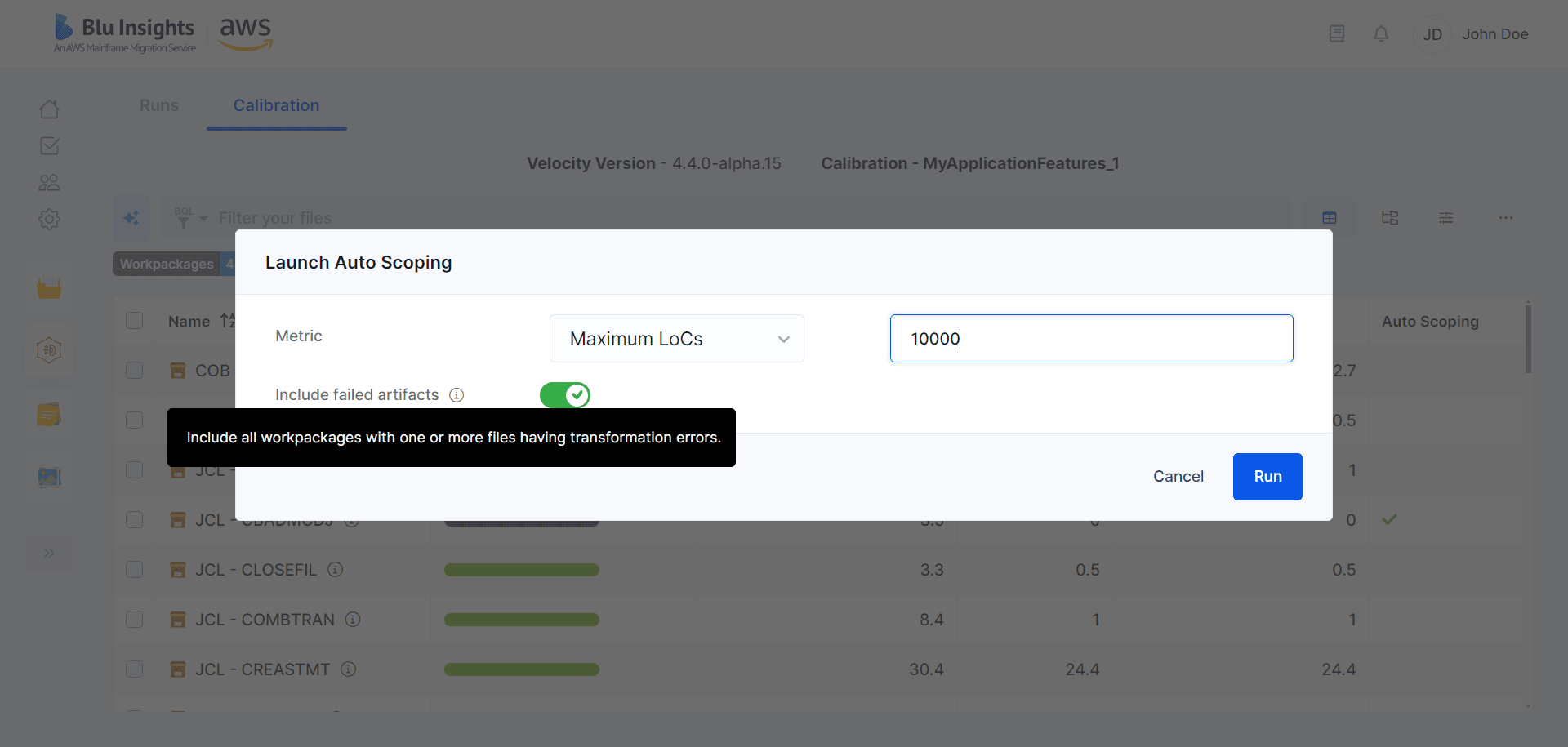

Auto scoping

To further help you choose a calibration scope, we introduce the auto scoping process. It takes the metrics computed during the transformation step, along with constraints provided by the user (the maximum number of lines of code or of workpackages in the calibration scope), and finds the most valuable combination of application features within those constraints. You can choose whether to automatically include workpackages containing files that the AWS Blu Age Transformation Engine could not modernize. Such files are challenging and are therefore good candidates for a calibration scope. However, their number varies widely from project to project, so it can also make sense to choose the calibration scope without this constraint.
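Auto scoping is essentially a budgeted maximum-coverage problem. The algorithm used by AWS Blu Insights is not described here, so the sketch below uses a classic greedy heuristic instead. It repeatedly picks the workpackage that adds the most uncovered code pattern types per line of code, while respecting a hypothetical `max_loc` budget and `max_count` limit. It can also optionally seed the scope with workpackages flagged as containing unmodernized files. All names and data are illustrative.

```python
def auto_scope(wp_types, wp_loc, max_loc, max_count,
               include_errors=False, error_wps=frozenset()):
    """Greedy budgeted maximum coverage (a sketch, not the Blu Insights algorithm).

    wp_types: {workpackage: set of code pattern types}; wp_loc: {workpackage: LOC}.
    """
    selected, covered, used_loc = [], set(), 0
    if include_errors:
        # Optionally seed the scope with workpackages containing files
        # the Transformation Engine could not modernize
        for wp in sorted(error_wps):
            if len(selected) < max_count and used_loc + wp_loc[wp] <= max_loc:
                selected.append(wp)
                covered |= wp_types[wp]
                used_loc += wp_loc[wp]
    while len(selected) < max_count:
        best, best_ratio = None, 0.0
        for wp in wp_types:
            if wp in selected or used_loc + wp_loc[wp] > max_loc:
                continue  # already chosen, or would exceed the LOC budget
            gain = len(wp_types[wp] - covered)   # newly covered pattern types
            ratio = gain / max(wp_loc[wp], 1)    # value per line of code
            if ratio > best_ratio:
                best, best_ratio = wp, ratio
        if best is None:  # nothing fits, or nothing adds new coverage
            break
        selected.append(best)
        covered |= wp_types[best]
        used_loc += wp_loc[best]
    return selected, covered, used_loc


wp_types = {"WP1": {"FILE_READ", "SQL_SELECT", "COMPUTE"},
            "WP2": {"FILE_READ"},
            "WP3": {"CICS_SEND"},
            "WP4": {"SQL_SELECT", "CICS_SEND", "SORT"}}
wp_loc = {"WP1": 2000, "WP2": 500, "WP3": 300, "WP4": 1500}
print(auto_scope(wp_types, wp_loc, max_loc=3000, max_count=3))
print(auto_scope(wp_types, wp_loc, 3000, 3, include_errors=True, error_wps={"WP1"}))
```

Greedy selection is fast but not optimal. In the first call, it picks `WP3`, `WP2` and `WP4` (4 pattern types, 2,300 lines), although `WP1` plus `WP3` would cover the same number of types with one workpackage fewer. An exact search (for example integer programming) avoids this at a higher computational cost. This sketch also sums LOC per workpackage, so files shared between workpackages are counted more than once.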

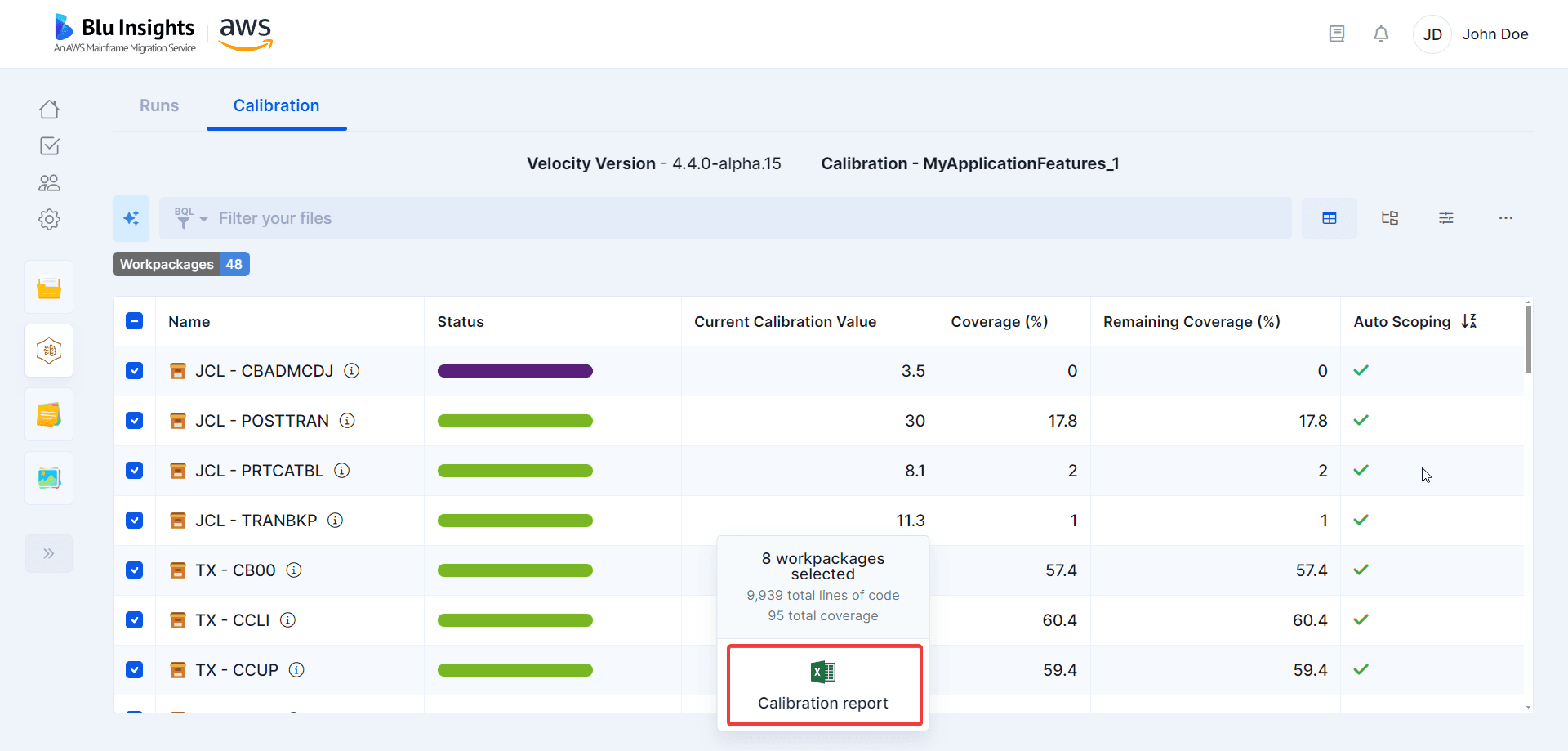

Calibration report

Once workpackages have been selected, either manually or with the auto scoping process, a calibration report can be exported. This report can then be imported as an Excel file into an AWS Blu Insights Codebase to consolidate the workpackages into a Calibration scope and a Mass Modernization scope.

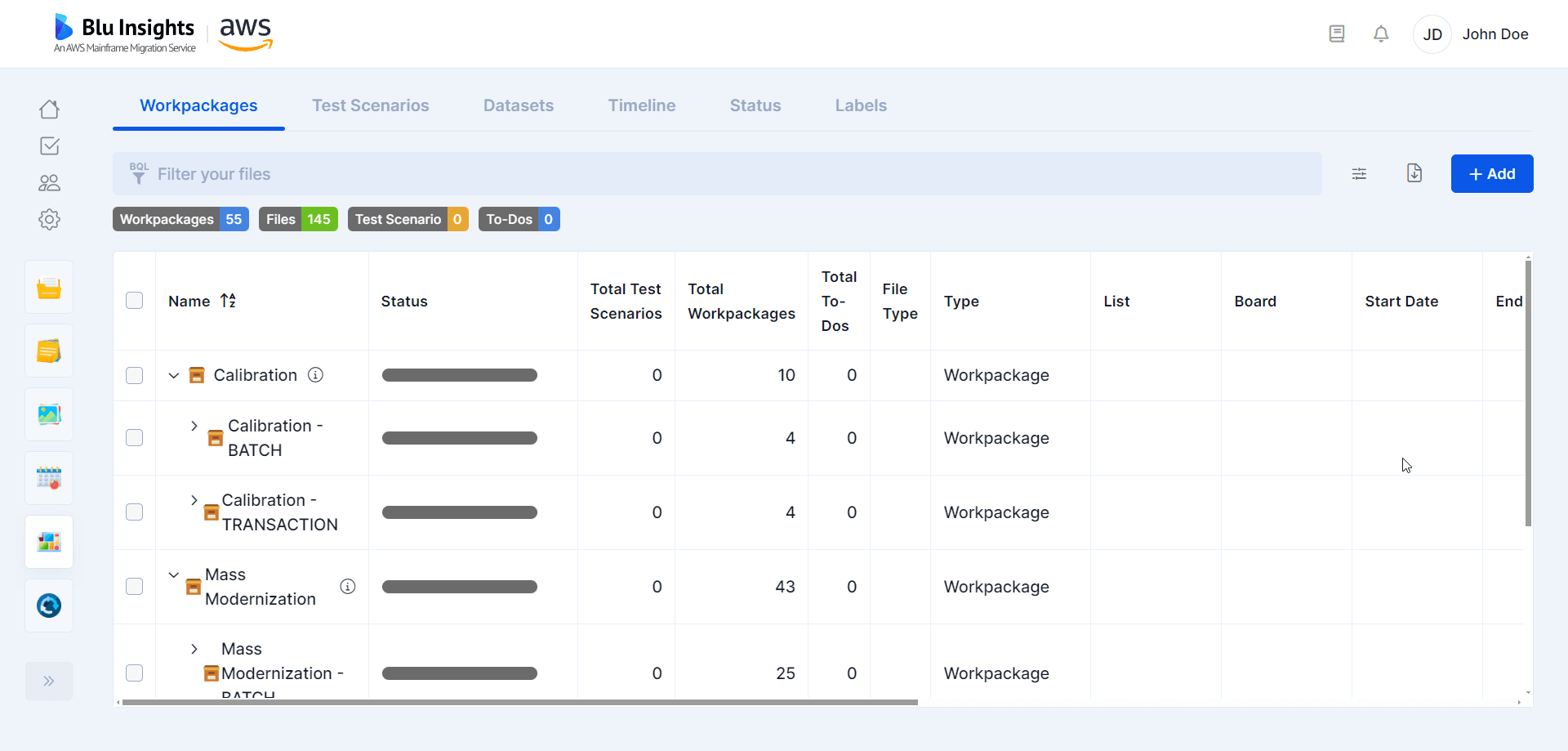

As a result, the codebase application is split into two parent workpackages: Calibration and Mass Modernization. The Calibration workpackage contains, as children, all the Application Features selected with the assistant, and the Mass Modernization workpackage contains all the other Application Features. Defining this Calibration workpackage launches the next steps of the modernization journey with the customer. We can now present this result to the customer, backed by the calibration process that supports our choices. The Calibration workpackage will define the test cases for the Calibration phase.
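The resulting split is a simple partition of the Application Features. The sketch below illustrates it, assuming features are identified by name. The function name and feature names are hypothetical, and the sketch does not model the Excel report format used for the import.

```python
def split_scope(features, calibration):
    """Partition Application Features into the Calibration and Mass Modernization parents."""
    chosen = set(calibration)
    unknown = chosen - set(features)
    if unknown:
        # Every selected workpackage must be one of the project's Application Features
        raise ValueError(f"not an Application Feature: {sorted(unknown)}")
    return {
        # Preserve the original feature order in both parents
        "Calibration": [f for f in features if f in chosen],
        "Mass Modernization": [f for f in features if f not in chosen],
    }


features = ["AF_ORDERS", "AF_BILLING", "AF_REPORTS", "AF_ADMIN"]
print(split_scope(features, ["AF_BILLING", "AF_ORDERS"]))
```

Because every feature lands in exactly one parent, nothing is left out of the overall modernization scope.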

We would be happy to hear from you about this new feature.

Have a productive day!